OPERATION STARFALL

About Feature

Operation Starfall is a 2D local co-op Metroidvania inspired by 1980s cartoons.

This prototype explored a Fog of War minimap system, using shaders and RenderTextures to reveal explored areas.

This is a technical project; see the Glossary if any terms are unfamiliar.

My Features

Intro

This prototype explored Fog of War using shaders and RenderTextures. It didn’t ship, but it taught me a lot about GPU drawing logic and laid the groundwork for later teams.

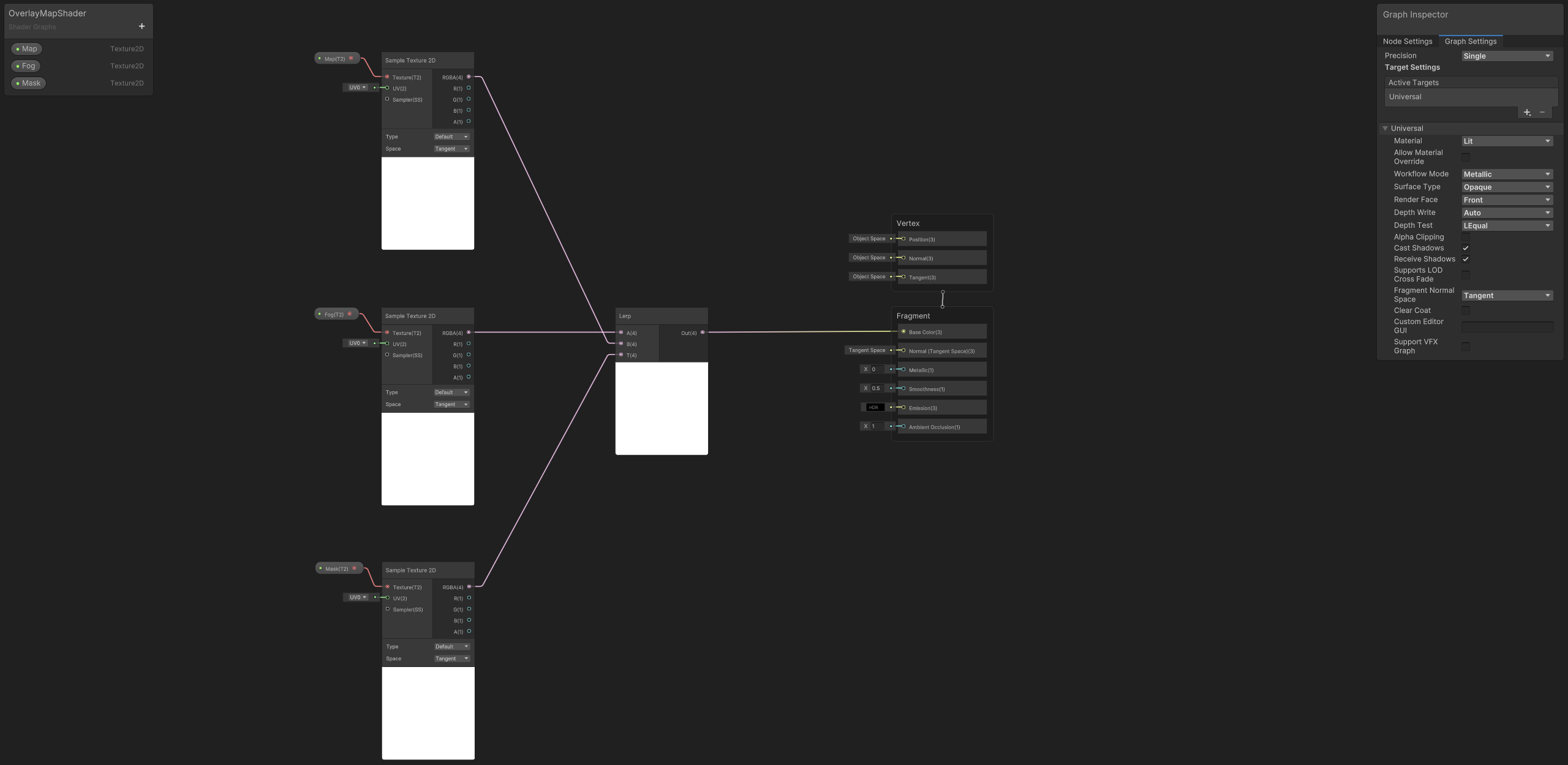

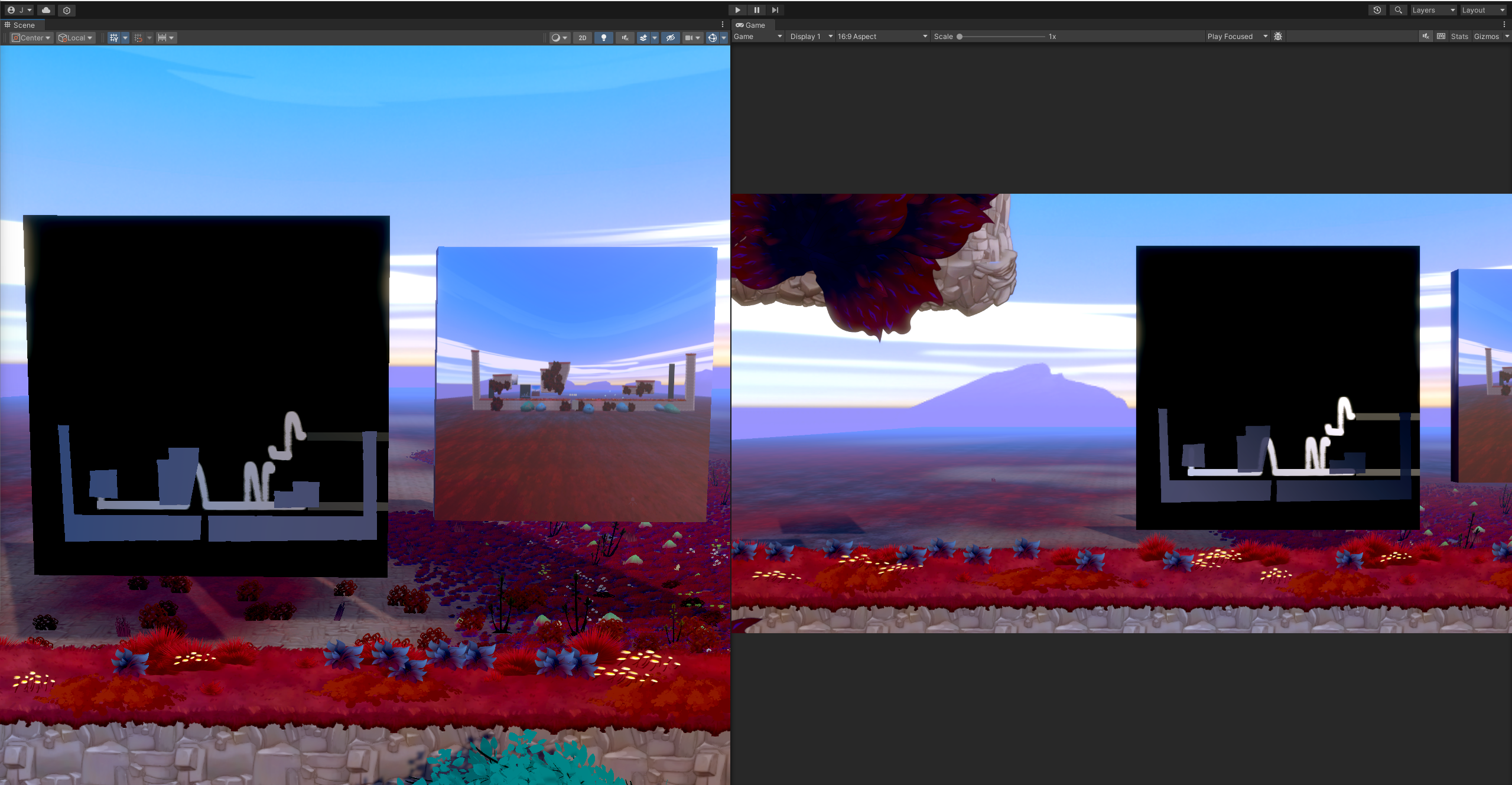

Fog of War ShaderGraph:

The shader uses a basic Lerp to blend three layers (base map, fog, and player mask), revealing explored areas in real time.
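To make the blend concrete, here is a minimal CPU-side sketch of what a Lerp between the fog and the base map, driven by the player mask, could look like for a single pixel. The names `lerp`, `shade_pixel`, and the sample colours are hypothetical, and the actual ShaderGraph may wire the three layers differently.

```python
def lerp(a, b, t):
    """Linear interpolation: returns a when t == 0 and b when t == 1."""
    return a + (b - a) * t


def shade_pixel(base_rgb, fog_rgb, mask):
    """Blend one pixel. mask 0.0 = unexplored (fog), 1.0 = explored (base map).

    Assumption: the mask is a single 0..1 value per pixel, as painted
    into the player-mask texture.
    """
    return tuple(lerp(f, b, mask) for f, b in zip(fog_rgb, base_rgb))


# Hypothetical colours, for illustration only.
base = (0.2, 0.8, 0.4)
fog = (0.0, 0.0, 0.0)

unexplored = shade_pixel(base, fog, 0.0)  # fully fogged
explored = shade_pixel(base, fog, 1.0)    # fully revealed
edge = shade_pixel(base, fog, 0.5)        # soft edge of the revealed area
```

Because the mask is continuous, a soft-edged brush gives a soft fog boundary without any extra work in the shader.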

Development

During early brainstorming, we explored several ways to create the minimap.

I suggested using a secondary camera to render the entire level and began experimenting with a technique I had just discovered: blitting, a way to quickly copy pixel data from one texture to another, like taking a low-cost screenshot.
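As a rough illustration of the idea, the sketch below models a blit on the CPU with plain nested lists standing in for textures. Unity exposes the real operation as `Graphics.Blit`, which runs on the GPU; the `blit` function and its `pixel_fn` parameter here are hypothetical stand-ins, and this version assumes both textures have the same size.

```python
def blit(source, destination, pixel_fn=None):
    """Copy every pixel from source into destination.

    pixel_fn, if given, is applied to each pixel on the way through,
    loosely mirroring a blit that runs a shader over the source.
    Assumption: source and destination have identical dimensions.
    """
    same_rows = len(source) == len(destination)
    if not same_rows or any(len(s) != len(d) for s, d in zip(source, destination)):
        raise ValueError("source and destination must have the same dimensions")
    for y, row in enumerate(source):
        for x, pixel in enumerate(row):
            destination[y][x] = pixel if pixel_fn is None else pixel_fn(pixel)


src = [[1, 2], [3, 4]]
dst = [[0, 0], [0, 0]]

blit(src, dst)                       # straight copy
copied = [row[:] for row in dst]

blit(src, dst, lambda p: p * 2)      # copy through a per-pixel function
processed = [row[:] for row in dst]
```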

I first attempted camera blitting with limited success, which pushed me to study Unity’s rendering pipeline more closely.

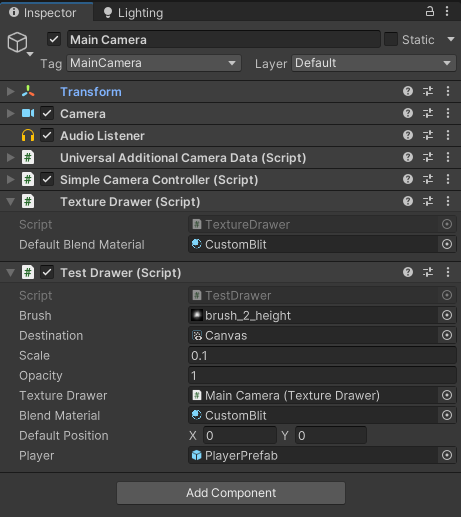

Two classes attached to the camera:

After a few failed attempts, we reached out to our technical director for guidance. I explained I was struggling to blit the full level through the camera.

Function derived from the original TextureDrawer class:

The original TextureDrawer class was provided by Berend Weij, the Technical Director.

It was originally used in a VR project and later adapted for this prototype.

From that point, I created a new class built on the original TextureDrawer logic.

This class utilized a brush texture and blend shader to paint directly onto a RenderTexture canvas.

By using a custom blit shader and a simple one-line call to draw the brush onto the destination texture, I was able to reproduce the behavior I originally intended with camera blitting, only with more control and better performance.
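The painting step can be sketched on the CPU as stamping a small brush image onto a larger canvas. This is only an analogue of the GPU approach described above: the `stamp` function is hypothetical, and the "keep the brighter value" blend is an assumption, since the actual blend shader is not shown here.

```python
def stamp(canvas, brush, cx, cy):
    """Draw brush centred on pixel (cx, cy) of canvas.

    Assumed blend rule: keep the brighter of the existing and brush values,
    so already-revealed areas are never darkened again.
    """
    brush_h, brush_w = len(brush), len(brush[0])
    top, left = cy - brush_h // 2, cx - brush_w // 2
    for by in range(brush_h):
        for bx in range(brush_w):
            y, x = top + by, left + bx
            # Skip brush pixels that fall outside the canvas.
            if 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
                canvas[y][x] = max(canvas[y][x], brush[by][bx])


# A 5x5 canvas (all fog) and a soft 3x3 brush, both hypothetical.
canvas = [[0.0] * 5 for _ in range(5)]
brush = [
    [0.0, 0.5, 0.0],
    [0.5, 1.0, 0.5],
    [0.0, 0.5, 0.0],
]

stamp(canvas, brush, 2, 2)  # reveal around the centre
stamp(canvas, brush, 0, 0)  # partly off-canvas: clipped, no error
```

Stamping the same spot twice leaves the canvas unchanged, which is what lets the brush be drawn every frame without washing out the result.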

Manual drawing in action:

Since the goal was to clear fog dynamically as the player moved, I linked the draw position directly to the player’s world position.

The Custom Blit shader converts vertex positions from object space to clip space, ensuring the brush only draws within the bounds of its target texture. This gave the system the spatial accuracy I was aiming for.
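Linking the draw position to the player requires some mapping from world space into the texture. The sketch below shows one simple way to do that on the CPU using normalised coordinates; it is not the shader's object-to-clip-space transform, and `world_to_pixel`, the level bounds, and the texture size are all hypothetical values chosen for illustration.

```python
def world_to_pixel(world_pos, level_min, level_max, tex_size):
    """Map a 2D world position to integer pixel coordinates.

    Returns None when the position lies outside the level bounds,
    so nothing is drawn beyond the target texture.
    """
    (wx, wy), (min_x, min_y), (max_x, max_y) = world_pos, level_min, level_max
    width, height = tex_size
    # Normalise to the 0..1 range across the level.
    u = (wx - min_x) / (max_x - min_x)
    v = (wy - min_y) / (max_y - min_y)
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return None
    # Clamp so a position exactly on the max edge maps to the last pixel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


# Hypothetical level spanning -50..50 on both axes, mapped to a 256x256 texture.
LEVEL_MIN, LEVEL_MAX, TEX_SIZE = (-50.0, -50.0), (50.0, 50.0), (256, 256)

centre = world_to_pixel((0.0, 0.0), LEVEL_MIN, LEVEL_MAX, TEX_SIZE)
corner = world_to_pixel((50.0, 50.0), LEVEL_MIN, LEVEL_MAX, TEX_SIZE)
outside = world_to_pixel((60.0, 0.0), LEVEL_MIN, LEVEL_MAX, TEX_SIZE)
```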

Drawn texture result:

Thanks to this setup, the texture state persists between play sessions. Even after restarting the game, the drawn path remains and continues updating in real time.

Conclusion

Though unfinished, this prototype introduced me to Unity’s rendering pipeline and GPU-accelerated systems. I built a dynamic drawing system that revealed map areas in real time, which later teams could build upon.

Player path drawing in action:

The brush draws the player’s trail in real time using GPU memory. Source code: GitHub

Glossary

- Render: Drawing images to the screen or a texture.

- Texture: An image stored in memory, used in shaders or as surfaces.

- Shader: A small GPU program that controls how things are drawn.

- Blit: Copying pixel data from one texture to another.

- RenderTexture: A special texture Unity can draw onto in real time.